Static binaries for a C++ application

ArangoDB is a multi-model database written in C++. It is a sizable application with an executable size of 38MB (stripped) and quite a few library dependencies. We provide binary packages for Linux, Windows and macOS, and for Linux we cover all major distributions and their different versions, which makes our build and delivery pipeline extremely cluttered and awkward. At the beginning of this story, we needed approximately 12 hours just to build and publish a release, and that was if everything went well. This is the beginning of a tale of how we attacked this problem. Read more

Milestone ArangoDB 3.4: ArangoSearch – Information retrieval with ArangoDB

For the upcoming ArangoDB 3.4 release we’ve implemented a set of information retrieval features exposed via a new database object, `View`. The `View` object is meant to be treated as another data source accessible via AQL, and the concept itself is pretty similar to a classical “materialized” view in SQL.

While we are still working on completing the feature, you can already try our retrieval engine in the milestone release of the upcoming ArangoDB 3.4, published today. Read more

ArangoJS 6.0.0 released: Load Balancing, Automated Failover and completely written in TypeScript

Version 6.0.0 of the JavaScript driver arangojs is now available (Find it on GitHub).

This is a major release that introduces a small number of breaking changes so make sure to check out the arangojs changelog before upgrading. The most significant additions in this release are support for load balancing and automated failover as well as improved browser and TypeScript support. Read more

ArangoDB 3.3: DC2DC Replication, Encrypted Backup

Just in time for the holidays we have a nice present for you all - ArangoDB 3.3. This release focuses on replication, resilience and stability, and thus on general readiness for your small and very large production use cases. There are improvements for the community as well as for the Enterprise Edition. We sincerely hope to have found the right balance between them.

In the Community Edition there are:

- Easier server-level replication

- A resilient active/passive mode for single server instances with automatic failover

- RocksDB throttling for increased guaranteed write performance

- Faster collection and shard creation in the cluster

- Lots of bug fixes (most of them have been backported to 3.2)

In the Enterprise Edition there are:

- Datacenter to datacenter replication for clusters

- Encrypted backup and restore

In short, this release is all about improving replication and resilience. For us, the two most exciting new features are datacenter to datacenter replication and the resilient active/passive mode for single servers.

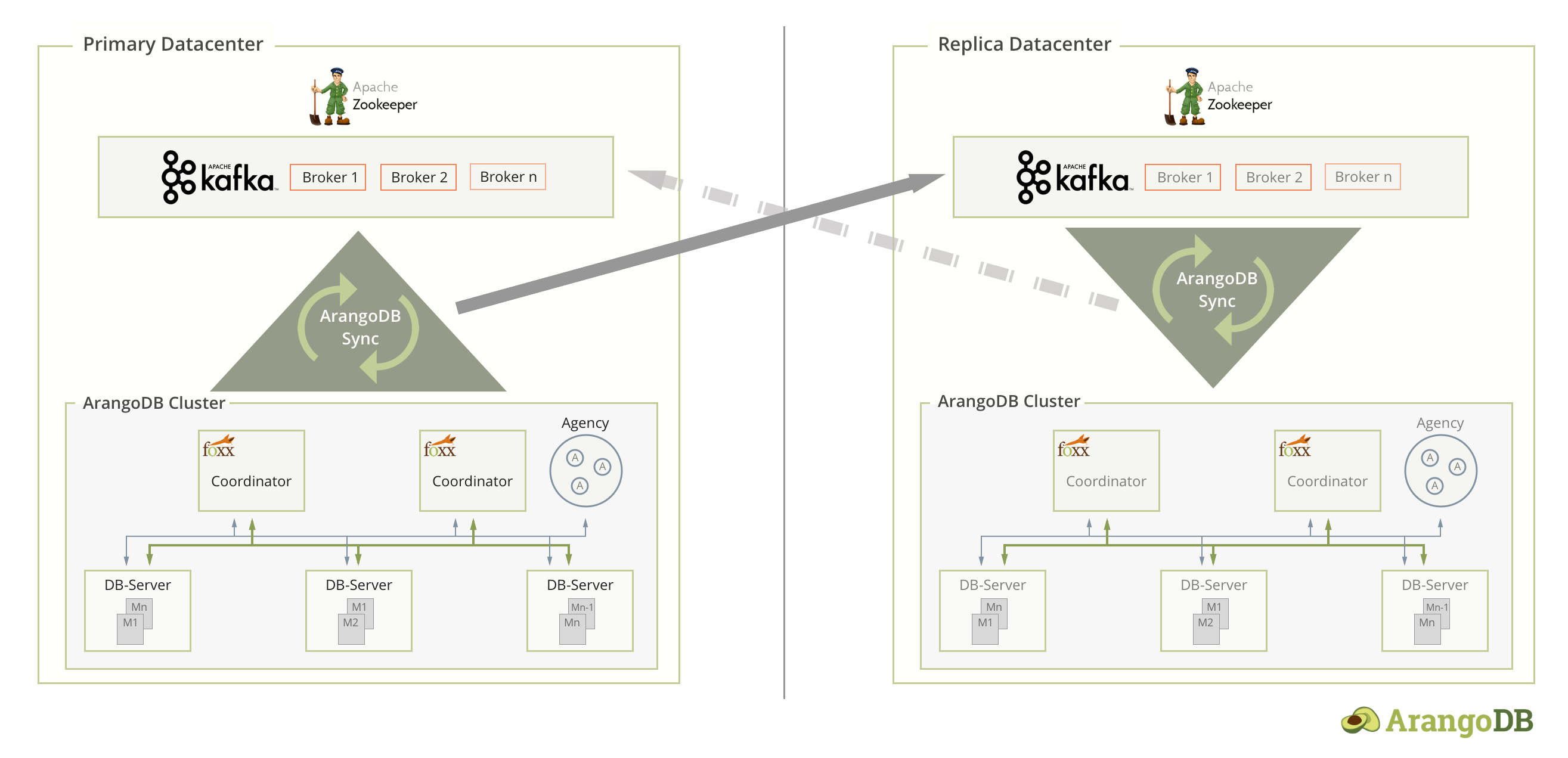

Datacenter to datacenter replication

Every company needs a disaster recovery plan for all important systems. This is true from small units like single processes running in some container to the largest distributed architectures. For databases in particular this usually involves a mixture of fault-tolerance, redundancy, regular backups and emergency plans. The larger a data store, the more difficult it is to come up with a good strategy.

Therefore, it is desirable to be able to run a distributed database in one datacenter and replicate all transactions to another datacenter in some way. Often, transaction logs are shipped over the network to replicate everything in another, identical system in the other datacenter. Some distributed data stores have built-in support for multiple datacenter awareness and can replicate between datacenters in a fully automatic fashion.

ArangoDB 3.3 takes a first evolutionary step towards multi-datacenter support by introducing asynchronous datacenter to datacenter replication. Our solution scales to arbitrary cluster sizes, provided the network link between the datacenters has enough bandwidth. It is fault-tolerant without a single point of failure and includes a lot of metrics for monitoring in a production scenario.

Read more about Datacenter to Datacenter Replication and follow the generic installation instructions.

This is a feature available only in the Enterprise Edition.

Server-level replication

We have had asynchronous replication functionality in ArangoDB since release 1.4, but setting it up was admittedly too hard. One major design flaw in the existing asynchronous replication was that it worked for a single database only.

Replicating from a leader server that has multiple databases required manual fiddling on the follower for each individual database to replicate. When a new database was created on the leader, one needed to take action on the follower to ensure that data for that database actually got replicated. Replication on the follower was also not aware of when a database was dropped on the leader.

This is now fixed in 3.3. In order to set up replication on a 3.3 follower for all databases of a given 3.3 leader, there is now the so-called `globalApplier`. It has the same interface as the existing `applier`, but it will replicate from all databases on the leader and not just a single one.

As a consequence, server-global replication can now be set up permanently with a single JavaScript command or API call.

A resilient active/passive mode for single server instances with automatic failover

While it was always possible to set up two servers and connect them via asynchronous replication, the replication setup was not straightforward (see above), and it also did not handle automatic failover. If the leader died, one needed to have some machinery in place to stop replication on the follower and make it the leader. ArangoDB did not provide this machinery, and left it to client applications to solve the failover problem.

With 3.3, this has become much easier. There is now a mode to start two arangod instances as a pair of connected servers with automatic failover.

The two servers are connected via asynchronous replication. One of the servers is the elected leader, and the other one is made a follower automatically. At startup, the two servers race for leadership. The follower will automatically start replication from the leader for all databases, using the server-global replication (see above).

When the leader goes down, this is automatically detected by an agency instance, which is also started in this mode. This instance will make the previous follower stop its replication and make it the new leader.

The follower will automatically deny all read and write requests from client applications. Only the replication is allowed to access the follower's data until the follower becomes a new leader.
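The roles described above can be pictured with a small toy model. This is only a sketch under simplifying assumptions, not ArangoDB's implementation: the class `Pair`, its methods and the server names are hypothetical, and the `supervise` step merely stands in for the agency instance that detects a dead leader.

```python
class Pair:
    """Toy model of two servers: one leader, one follower."""

    def __init__(self, race_winner: str, other: str) -> None:
        # Whoever wins the startup race becomes the leader.
        self.leader = race_winner
        self.follower = other
        self.alive = {race_winner: True, other: True}

    def accepts_clients(self, server: str) -> bool:
        """Only a live leader serves client reads and writes."""
        return self.alive[server] and server == self.leader

    def supervise(self) -> None:
        """Supervisor step (stand-in for the agency): promote the follower
        when the leader is down and the follower is still alive."""
        if not self.alive[self.leader] and self.alive[self.follower]:
            self.leader, self.follower = self.follower, self.leader


pair = Pair("server-a", "server-b")
print(pair.accepts_clients("server-a"), pair.accepts_clients("server-b"))

pair.alive["server-a"] = False  # the leader goes down
pair.supervise()                # the supervisor notices and promotes the follower
print(pair.leader, pair.accepts_clients("server-b"))
```

The point of the model is that clients never need to decide who the leader is; that decision lives in one supervising component.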

The arangodb starter supports starting two servers with asynchronous replication and failover out of the box, making the setup even easier.

The arangojs driver for JavaScript as well as the Go, PHP and Java drivers for ArangoDB are also being updated to support automatic failover in case the currently used server endpoint responds with HTTP 503. Read more details on the Java driver.
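On the client side, failing over on HTTP 503 could look roughly like the following sketch. It does not show the logic of any actual ArangoDB driver: the function `request_with_failover`, the `Send` transport signature and the endpoint URLs are assumptions made for illustration, and the transport is simulated so that the example runs without a network.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical transport: takes an endpoint URL, returns (status, body).
Send = Callable[[str], Tuple[int, str]]


def request_with_failover(endpoints: Sequence[str], send: Send) -> Tuple[str, str]:
    """Try each endpoint in order and move on when one answers HTTP 503.

    Returns (endpoint_that_answered, body). Raises RuntimeError if every
    endpoint responded with 503.
    """
    for endpoint in endpoints:
        status, body = send(endpoint)
        if status == 503:
            # This endpoint cannot serve us right now: try the next one.
            continue
        return endpoint, body
    raise RuntimeError("all endpoints responded with HTTP 503")


def fake_send(endpoint: str) -> Tuple[int, str]:
    """Simulated pair in which the first server rejects client requests."""
    if endpoint == "http://server-a:8529":
        return 503, "service unavailable"
    return 200, "ok"


used, body = request_with_failover(
    ["http://server-a:8529", "http://server-b:8529"], fake_send
)
print(used, body)
```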

Encrypted backup

This feature allows you to create an encrypted backup using arangodump. We use AES256 for the encryption. The encryption key can be read from a file or from a generator program. It works in single server and cluster mode. Together with encryption at rest, this allows you to keep all your sensitive data encrypted whenever it is not in memory.

Here is an example for encrypted backup:

    arangodump --collection "secret" dump --encryption.keyfile ~/SECRET-KEY

As you can see, in order to create an encrypted backup, simply add the --encryption.keyfile option when invoking arangodump. Needless to say, restore is equally easy using arangorestore.

The key must be exactly 32 bytes long (this is a requirement of the AES block cipher we are using). For details see the documentation in the manual.
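A key file of the required length can be produced with any source of random bytes. The sketch below is one possible way to do it and is not taken from the ArangoDB manual; the helper names `write_keyfile` and `check_keyfile` are hypothetical, and the demo writes into a temporary directory rather than to `~/SECRET-KEY`.

```python
import os
import tempfile

KEY_LENGTH = 32  # bytes, the length required by the text above


def write_keyfile(path: str) -> None:
    """Write KEY_LENGTH random bytes to path, readable by the owner only."""
    key = os.urandom(KEY_LENGTH)
    # O_EXCL refuses to overwrite an existing key by accident.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(key)


def check_keyfile(path: str) -> bool:
    """Return True if the file holds exactly KEY_LENGTH bytes."""
    return os.path.getsize(path) == KEY_LENGTH


demo_dir = tempfile.mkdtemp()
demo_path = os.path.join(demo_dir, "SECRET-KEY")
write_keyfile(demo_path)
print(check_keyfile(demo_path))
```

Checking the length before a backup run is cheap and catches the common mistake of a trailing newline added by a text editor.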

Note that encrypted backups can be used together with the already existing RocksDB encryption-at-rest feature, but they can also be used for the MMFiles engine, which does not have encryption-at-rest.

This is a feature available only in the Enterprise Edition.

RocksDB throttling

While throttling may sound bad at first, the RocksDB throttling is there for a good reason. It throttles write operations to RocksDB in the RocksDB storage engine, in order to prevent total stalls. The throttling is adaptive, meaning that it automatically adapts to the write rate. Read more about RocksDB throttling.
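To illustrate what "adaptive" can mean here, the following toy throttle lets the allowed write rate follow a smoothed measurement of what the storage layer recently sustained. It is a generic exponential-smoothing sketch, not the algorithm used by ArangoDB or RocksDB; the class `AdaptiveThrottle`, its parameters and the numbers are all assumptions.

```python
class AdaptiveThrottle:
    """Toy write throttle: the allowed rate follows a smoothed measurement."""

    def __init__(self, initial_rate: float, smoothing: float = 0.5) -> None:
        self.allowed_rate = initial_rate  # bytes per second writers may submit
        self.smoothing = smoothing        # weight given to the newest measurement

    def observe(self, sustained_rate: float) -> None:
        """Blend the latest sustainable throughput into the allowed rate."""
        self.allowed_rate = (
            self.smoothing * sustained_rate
            + (1.0 - self.smoothing) * self.allowed_rate
        )

    def delay_for(self, batch_bytes: int) -> float:
        """Seconds a writer should wait so the batch respects the allowed rate."""
        return batch_bytes / self.allowed_rate


throttle = AdaptiveThrottle(initial_rate=100.0)
throttle.observe(50.0)  # storage only kept up with 50 bytes/s: slow writers down
print(throttle.allowed_rate, throttle.delay_for(150))
```

Slowing every write a little is the trade-off: it keeps the backlog from growing to the point where writes stop entirely.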

Faster shard creation in cluster

Creating collections is what all ArangoDB users do. It's one of the first steps carried out. So it should be as quick as possible.

When using the cluster, users normally want resilience, so replicationFactor is set to at least 2. The number of shards is often set to pretty high values (e.g., collections with 100 shards).

Internally this will first store the collection metadata in the agency, and then the assigned shard leaders will pick up the change and begin creating the shards. When the shards are set up on the leader, the replication is kicked off, so every data modification will not only become effective on the leader, but also on the followers. In 3.3, this process takes some shortcuts for the initial creation of shards.

Conclusion

The entire ArangoDB team is proud to release version 3.3 of ArangoDB just in time for the holidays! We hope you will enjoy the upgrade. We invite you to take ArangoDB 3.3 for a spin and to let us know what you think via our Community Slack channel or hacker@arangodb.com. We look forward to your feedback!

ArangoDB 3.3 Beta Release – New Features and Enhancements

It is all about improving replication. ArangoDB 3.3 comes with two exciting new features: data-center to data-center replication for clusters and a much improved active-passive mode for single servers. ArangoDB 3.3 focuses on replication and improvements in this area, and provides a much better user experience when setting up a resilient single server with automatic failover.

This beta release is feature complete and contains stability improvements compared to the recent milestones 1 and 2 of ArangoDB 3.3. However, it is not yet meant for production use. We will provide ArangoDB 3.3 GA after extensive internal and external testing of this beta release. Read More

ArangoDB | Milestone2: ArangoDB 3.3 New Data Replication

We’re pleased to announce the availability of Milestone 2 of ArangoDB 3.3. There are a number of improvements; please consult the changelog for a complete overview of changes.

This milestone release contains our new and improved data replication engine. The replication engine is at the core of every distributed ArangoDB setup: whether it is a typical master/slave setup between multiple single servers or a full-fledged cluster. During the last month we:

- redesigned the replication protocol to be more reliable

- refactored and modernized the internal infrastructure to better support continuous asynchronous replication

- added a new global asynchronous replication API, to allow you to automatically and continuously mirror an entire ArangoDB single-instance (master) onto another one (or more)

- added support for automatic failover from a master server to one of its replica slaves, if the master server becomes unreachable

Milestone 1 ArangoDB 3.3: Datacenter to Datacenter Replication

Every company needs a disaster recovery plan for all important systems. This is true from small units like single processes running in some container to the largest distributed architectures. For databases in particular this usually involves a mixture of fault-tolerance, redundancy, regular backups and emergency plans. The larger a data store, the more difficult it is to come up with a good strategy.

Therefore, it is desirable to be able to run a distributed database in one datacenter and replicate all transactions to another datacenter in some way. Often, transaction logs are shipped over the network to replicate everything in another, identical system in the other datacenter. Some distributed data stores have built-in support for multiple datacenter awareness and can replicate between datacenters in a fully automatic fashion.

This post gives an overview of the first evolutionary step of ArangoDB towards multi-datacenter support, which is asynchronous datacenter to datacenter replication.

ArangoDB 3.2: RocksDB, Pregel, Fault Tolerant Foxx, Satellite Collections

We are pleased to announce the release of ArangoDB 3.2. Get it here. After an unusually long hackathon, we eliminated two large roadblocks, added a long overdue feature and integrated an interesting new one into this release. Furthermore, we’re proud to report that we increased the performance of ArangoDB by 35% on average, while at the same time reducing the memory footprint compared to version 3.1. In combination with greatly improved cluster management, we think ArangoDB 3.2 is by far our best work. (see release notes for more details)

One key goal of ArangoDB has always been to provide a rock solid platform for building ideas. Our users should always feel safe to try new things with minimal effort by relying on ArangoDB. Today’s 3.2 release is an important milestone towards this goal. We’re excited to release such an outstanding product today.

RocksDB

With the integration of Facebook's RocksDB as the first pluggable storage engine in our architecture, users can now work with as much data as fits on disk. Together with the better locking behavior of RocksDB (i.e., document-level locks), write-intensive applications will see significant performance improvements. With no memory limit and only document-level locks, we have eliminated two roadblocks for many users. If one chooses RocksDB as the storage engine, everything, including indexes, will persist on disk. This will significantly reduce start-up time.

See this how-to on “Comparing new RocksDB and mmfiles engine” to test the new engine for your operating system and use case.

Pregel

Distributed graph processing was a missing feature in ArangoDB’s graph toolbox. We’re willing to admit that, especially since we managed to fill this need by implementing the Pregel computing model.

With PageRank, Community Detection, Vertex Centrality Measures and further algorithms, ArangoDB can now be used to gain high-level insights into the hidden characteristics of graphs. For instance, you can use graph processing capabilities to detect communities. You can then use the results to shard your data efficiently to a cluster and thereby enable SmartGraph usage to its full potential. We’re confident that with the integration of distributed graph processing, users will now have one of the most complete graph toolsets available in a single database.

Test the new Pregel integration with this Community Detection Tutorial and further sharpen your advanced graph skills with this new tutorial about Using SmartGraphs in ArangoDB.

Fault-Tolerant Foxx Services

Many people already enjoy using our Foxx JavaScript framework for data-centric microservices. Defining your own highly configurable HTTP routes with full access to the ArangoDB core on the C++ level can be pretty handy. In version 3.2, our Foxx team completely rewrote the management internals to support fault-tolerant Foxx services. This ensures multi-coordinator clusters will always keep their services in sync, and new coordinators are fully initialized, even when all existing coordinators are unavailable.

Test the new fault-tolerant Foxx yourself or learn Foxx by following the brand new Foxx tutorial.

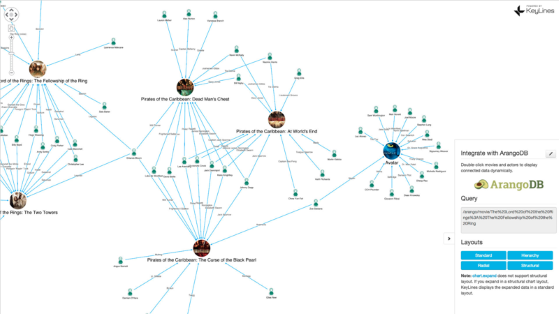

Powerful Graph Visualization

Managing and processing graph data may not be enough; visualizing the insights is often just as important. No worries. With ArangoDB 3.2, this can be handled easily. You can use the open-source option via arangoexport to export the data and then import it into Cytoscape (check out the tutorial).

Or you can just plug in the brand new Keylines 3.5 via Foxx and install an on-demand connection. With this option, you will always have the latest data visualized neatly in Keylines without any export/import hassle. Just follow this tutorial to get started with ArangoDB and Keylines.

Read-Only Users

To enhance basic user management in ArangoDB, we added Read-Only Users. The rights of these users can be defined on database and collection levels. On the database level, users can be given administrator rights, read access or denied access. On the collection level, within a database, users can be given read/write, read only or denied access. If a user is not given access to a database or a collection, the databases and collections won’t be shown to that user. Take the tutorial about new User Management.

We also improved geo queries since these are becoming more important to our community. With geo_cursor, it’s now possible to sort documents by distance to a certain point in space (Take the tutorial). This makes queries like “Where can I eat vegan in a radius of one mile around Times Square?” simple. We plan to add support for other geo-spatial functions (e.g., polygons, multi-polygons) in the next minor release. So watch for that.
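The idea behind such a query, filtering by radius and sorting by distance, can be sketched outside the database as follows. This is plain Python using the haversine formula, not AQL and not how geo_cursor is implemented; the function names, the place names and their coordinates are made up for illustration, and the reference point only approximates Times Square.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in meters
MILE_M = 1609.344           # one mile in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_within(docs, lat, lon, radius_m):
    """Return (distance, doc) pairs inside the radius, closest first."""
    hits = [(haversine_m(lat, lon, d["lat"], d["lon"]), d) for d in docs]
    hits = [h for h in hits if h[0] <= radius_m]
    return sorted(hits, key=lambda h: h[0])


# Hypothetical documents, deliberately listed in unsorted order.
places = [
    {"name": "far", "lat": 40.7000, "lon": -74.0100},
    {"name": "mid", "lat": 40.7640, "lon": -73.9800},
    {"name": "near", "lat": 40.7590, "lon": -73.9850},
]

names = [doc["name"] for _, doc in nearest_within(places, 40.7580, -73.9855, MILE_M)]
print(names)
```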

ArangoDB 3.2 Enterprise Edition: More Room for Secure Growth

The Enterprise Edition of ArangoDB is focused on solving enterprise-scale problems and secure work with data. In version 3.1, we introduced SmartGraphs to bring fast traversal response times to sharded datasets in a cluster. We also added auditing and enhanced encryption control. Download ArangoDB Enterprise Edition (forever free evaluation).

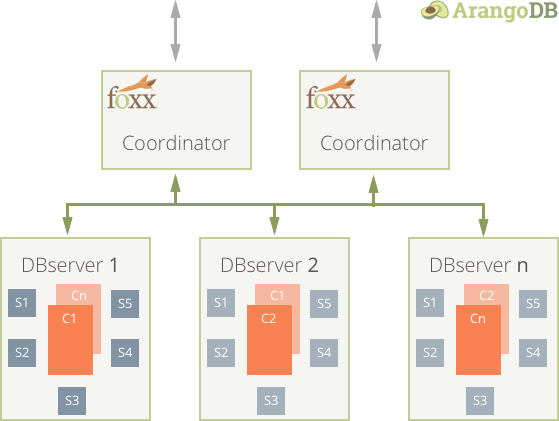

Working closely with one of our larger clients, we further explored and improved an idea we had about a year ago. Satellite Collections is the exciting result of this collaboration. It’s designed to enable faster join operations when working with sharded datasets. To avoid expensive network hops during join processing among machines, one has ‘only’ to find a solution to enable joins locally.

With Satellite Collections, you can define collections to shard to a cluster, as well as set collections to replicate to each machine. The ArangoDB query optimizer knows where each shard is located and sends requests to the DBServers involved, which then execute the query locally. The DBServers will then send the partial results back to the Coordinator, which puts together the final result. With this approach, network hops during join operations on sharded collections can be avoided, hence query performance is increased and network traffic reduced. This can be more easily understood with an example. In the schema below, collection C is sharded to multiple machines, while the smaller satellites (i.e., S1 - S5) are replicated to each machine, orbiting the shards of C.

Use cases for Satellite Collections are plentiful. In this more in-depth blog post, we use the example of an IoT case. Personalized patient treatment based on genome sequencing analytics is another excellent example where efficient join operations involving large datasets can help improve patient care and save infrastructure costs.
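The flow of a satellite join can be mimicked with in-memory data. The sketch below is a simplified model, not ArangoDB's query execution: the server names, the documents and the functions `local_join` and `coordinator_query` are hypothetical. Each server joins its own shard of C with its own full copy of the satellite, and a coordinator step merges the partial results.

```python
from typing import Dict, List

# Hypothetical data: collection C is split into one shard per DBServer.
shards_of_c: Dict[str, List[dict]] = {
    "dbserver1": [{"id": 1, "sensor": "a"}, {"id": 2, "sensor": "b"}],
    "dbserver2": [{"id": 3, "sensor": "a"}],
}

# The small satellite collection is copied in full to every DBServer.
satellite = [{"sensor": "a", "room": "lab"}, {"sensor": "b", "room": "hall"}]
satellite_copies = {server: list(satellite) for server in shards_of_c}


def local_join(server: str) -> List[dict]:
    """Join the local shard of C with the local satellite copy (no network hop)."""
    rooms = {s["sensor"]: s["room"] for s in satellite_copies[server]}
    return [
        {"id": doc["id"], "room": rooms[doc["sensor"]]}
        for doc in shards_of_c[server]
    ]


def coordinator_query() -> List[dict]:
    """Collect the partial results from every DBServer and merge them."""
    merged: List[dict] = []
    for server in shards_of_c:
        merged.extend(local_join(server))
    return sorted(merged, key=lambda row: row["id"])


result = coordinator_query()
print(result)
```

The cost of this layout is storage and write amplification for the satellite, which is why it suits small collections joined against large sharded ones.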

Security Enhancements

From the very beginning of ArangoDB, we have been concerned with security. AQL is already protected from injections. By using Foxx, sensitive data can be contained within a database, with only the results being passed to other systems, thus minimizing security exposure. But this is not always enough to meet enterprise-scale security requirements. With version 3.1, we introduced Auditing and Enhanced Encryption Control, and with ArangoDB 3.2, we added even more protection to safeguard data.

Encryption at Rest

With RocksDB, you can encrypt the data stored on disk using a highly secure AES algorithm. Even if someone steals one of your disks, they won’t be able to access the data. With this upgrade, ArangoDB takes another big step towards HIPAA compliance.

Enhanced Authentication with LDAP

Normally, users are defined and managed in ArangoDB itself. With LDAP, you can use an external server to manage your users. We have implemented a common schema which can be extended. If you have special requirements that don’t fit into this schema, please let us know.

Conclusion & You

The entire ArangoDB team is proud to release version 3.2 of ArangoDB -- this should not be a surprise considering all of the improvements we made. We hope you will enjoy the upgrade. We invite you to take ArangoDB 3.2 for a spin and to let us know what you think. We look forward to your feedback!

Download ArangoDB 3.2

The new SatelliteCollections Feature of ArangoDB

With the new Version 3.2 we have introduced a new feature called SatelliteCollections. This post explains what this is all about, how it can help you, and explains a concrete use case for which it is essential.

Background and Overview

Join operations are very useful but can be troublesome in a distributed database. This is because quite often, a join operation has to bring together different pieces of your data that reside on different machines. This leads to cluster internal communication and can easily ruin query performance. As in many contexts nowadays, data locality is very important to avoid such headaches. There is no silver bullet, because there will be many cases in which one cannot do much to improve data locality.

One particular case in which something can be done is a join operation between a very large collection (sharded across your cluster) and a small one. In that case one can afford to replicate the small collection to every server, and all join operations can be executed without network communication.

ArangoDB 3.2: Enhanced GraphQL Sync

Just in time for the upcoming 3.2.0 release, we have updated the graphql-sync module for compatibility with graphql-js versions 0.7.2, 0.8.2, 0.9.6 and 0.10.1. The graphql-sync module allows developers to implement GraphQL backends and schemas in strictly synchronous JavaScript environments like the ArangoDB Foxx framework by providing a thin wrapper around the official GraphQL implementation for JavaScript.

As a long-term database solution, ArangoDB is committed to API stability and avoids upgrades to third-party dependencies that would result in breaking changes. This means ArangoDB will continue to bundle the graphql-js 0.6.2 compatibility version of graphql-sync.
