ArangoSync: A Recipe for Reliability

Estimated reading time: 18 minutes

A detailed journey into deploying a DC2DC replicated environment

When we thought about all the things we wanted to share with our users, there were obviously a lot of topics to choose from. Our Enterprise feature, ArangoSync, is one we talk about frequently, and we have seen that our customers are keen to implement it in their environments, mostly because of the security requirement to have an ArangoDB cluster and all of its data available in multiple locations in case of a severe outage.

This blog post will help you set up and run an ArangoDB DC2DC environment and will guide you through all the necessary steps. By following them, you will end up with a production-grade deployment of two ArangoDB clusters that communicate with each other using datacenter-to-datacenter replication.

All of the best practices for encryption and secure authentication that we use in our day-to-day operations have been applied in this blog post, and every step of the setup is explained in detail. There should be no need to doubt, research or ponder which options to use, whether you are deploying in your home lab, in your production-grade database environment, or anywhere else you want to run a deployment like this.

An important note, however: ArangoSync and the encryption at rest used here are Enterprise features that we don’t offer in our Community version of ArangoDB. If you don’t have an Enterprise license available for this project, you can download an evaluation version that has all functionality at: https://www.arangodb.com/download-arangodb-enterprise/

That's a lot of words as an introduction but what actually is ArangoSync?

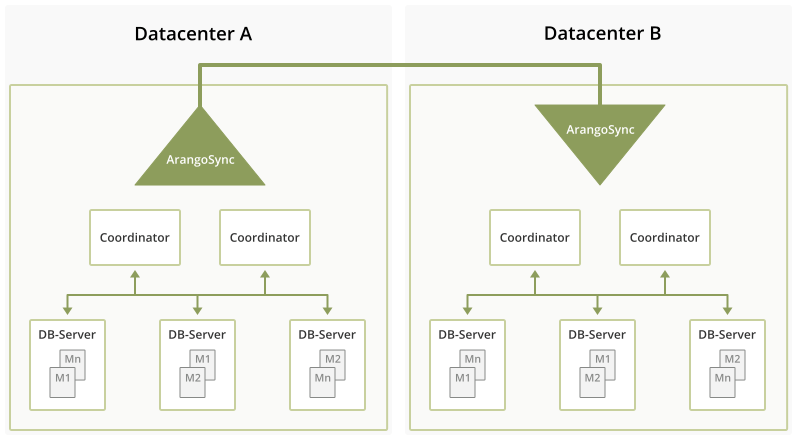

ArangoSync is our Enterprise feature that enables you to seamlessly and asynchronously replicate the entire structure and content in an ArangoDB cluster in one location to a cluster in another location. Imagine different cloud provider regions or different office locations in your company.

To run successfully, ArangoSync needs two fully functioning clusters and will not be useful when you’re only running a single instance of ArangoDB. So please also consider this when you’re making any plans to change or implement your database architecture.

In the above explanation I mentioned that ArangoSync works asynchronously. This means that when a client writes data into the source datacenter, the request is considered complete before the data has been replicated to the other datacenter. The time needed to completely replicate changes to the other datacenter is typically in the order of seconds, but this can vary significantly depending on load and on network and compute capacity, so choose hardware that suits your use case and performance needs.

ArangoSync performs replication in a single direction only. That means that you can replicate data from cluster A to cluster B or from cluster B to cluster A, but never to and from both at the same time.

ArangoSync runs a completely autonomous distributed system of synchronisation workers. Once configured properly via this blog post or any related documentation, it is designed to run continuously without manual intervention from anyone.

This of course doesn’t mean that it requires no maintenance at all. As with any distributed system, some attention is needed to monitor its operation and keep it secure (think of certificate and password rotation, to name just two examples).

Once configured, ArangoSync will replicate both the structure and data of an entire cluster. This means that there is no need to make additional configuration changes when adding/removing databases or collections. Any data or metadata in the cluster will be automatically replicated.

When to use it… and when not to use it

ArangoSync is a good solution in all cases where you want to replicate data from one cluster to another without the requirement that the data is available immediately in the other cluster.

If you’re still unsure whether ArangoSync is the right option for you, review the following list. ArangoSync is not a good fit if any of these apply to you or your organization:

- You want bidirectional replication, with data flowing from cluster A to cluster B and vice versa at the same time.

- You need synchronous replication between 2 clusters.

- There is no network connection between cluster A and B.

- You want complete control over which databases, collections and documents are replicated and which are not.

Okay, I’m done reading the official part. Now let's get started!

Each ArangoDB cluster needs at least 3 nodes. In this blog post, we’re using 6 nodes in total, meaning 3 nodes per datacenter.

As an example, we’re using the hypothetical locations dc1 and dc2. They can be located anywhere in the world, but they can also live in multiple VMs in your test environment:

sync-dc1-node01

sync-dc1-node02

sync-dc1-node03

sync-dc2-node01

sync-dc2-node02

sync-dc2-node03

The three nodes with “dc1” in their name are located in the first datacenter, the three nodes with “dc2” in the second datacenter. For testing, the location can of course be any local environment with sufficient resources to run six nodes at once.

In this blog post, we picked Ubuntu Linux as the OS, but since we’re using the .tar.gz distribution with static executables, you can choose whatever Linux distribution your organization runs and that you’re comfortable with. To control the ArangoDB installation, we use systemd, so the distribution should support systemd; otherwise you have to find another way to handle automatic restarts after a reboot.

Currently, the most recent release of ArangoDB is version 3.8.0, so all of our examples mentioning file names use the arangodb3e-linux-3.8.0.tar.gz archive. The following ports need to be open and accessible on each node:

- 8528 : starter

- 8529 : coordinator

- 8530 : dbserver

- 8531 : agent

- 8532 : syncmaster

- 8533 : syncworker

The process name next to each port number is for illustration purposes, so you know which port belongs to which process.
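If you would like to check the firewall rules from your local machine, a small script along these lines may help. It is only a sketch: check_port and check_all_ports are hypothetical helper names, the connection test relies on bash's /dev/tcp feature and the coreutils timeout command, and a port only answers once a process is actually listening on it, so it is most useful after the cluster has been started.

```shell
#!/usr/bin/env bash
# Sketch: report which of the six ports answer on every node.
# Node names and ports come from the lists above; the helper names
# are hypothetical and not part of ArangoDB.

NODES="sync-dc1-node01 sync-dc1-node02 sync-dc1-node03 sync-dc2-node01 sync-dc2-node02 sync-dc2-node03"
PORTS="8528 8529 8530 8531 8532 8533"

# Returns 0 if a TCP connection to host $1, port $2 succeeds within 3 seconds.
check_port() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Prints one line per node and port.
check_all_ports() {
  for node in $NODES; do
    for port in $PORTS; do
      if check_port "$node" "$port"; then
        echo "ok      ${node}:${port}"
      else
        echo "CLOSED  ${node}:${port}"
      fi
    done
  done
}
```

After sourcing the snippet, calling check_all_ports prints one line per node and port. A CLOSED line can mean either a firewall problem or that the corresponding process is not running yet.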

We will roll out the clusters as the root user, but this is not necessary. In fact, our own packages create the arangodb user during installation. It is considered good practice to keep file ownership separate for services, and you should do so in production environments. We could have used any normal user account, provided it has access to the nodes and their filesystem.

The only significant part where we need root access is to set up the systemd service. Another important prerequisite is that you have properly configured SSH access to all nodes.

Setting up ArangoDB clusters - A detailed overview

We will now go through all of the steps in detail. There are a bunch of them, so grab a cup of coffee or tea and sit back to work on this. As you might notice, the second half of the steps repeats the first half, because we’re setting up two similar clusters; this is intentional, so you have not misread anything. The settings for the two clusters differ slightly, and therefore we list the installation steps separately. All commands are written out in full and can be copied and pasted for future reference when you’d like to automate the installation in your own environment.

Extract the downloaded binary in its target location:

Assuming the archive file arangodb3e-linux-3.8.0.tar.gz is present on your local machine, we deploy it to each node in the first cluster with the following commands:

scp arangodb3e-linux-3.8.0.tar.gz root@sync-dc1-node01:/tmp

scp arangodb3e-linux-3.8.0.tar.gz root@sync-dc1-node02:/tmp

scp arangodb3e-linux-3.8.0.tar.gz root@sync-dc1-node03:/tmp

To install ArangoDB, we run the following commands on all cluster nodes:

mkdir -p /arangodb/data

cd /arangodb

tar xzvf /tmp/arangodb3e-linux-3.8.0.tar.gz

export PATH=/arangodb/arangodb3e-linux-3.8.0/bin:$PATH

A quick check to test that the installation works:

cd /arangodb

mkdir -p data

cd data

arangodb --starter.mode=single

This will launch a single server on each machine on port 8529, without any authentication or encryption. You can point your browser to the nodes on port 8529 to verify that the firewall settings are correct. If this does not work and you cannot reach the UI of the database, stop here and debug your firewall. Otherwise, you are bound to run into more difficult trouble later on, for example because the processes in your cluster cannot reach each other over the network.

Afterward, simply press Control-C and run the following to clean up:

cd /arangodb/data

rm -rf *

Having tested basic functionality, let's get to the actual deployment of the cluster.

Create a shared secret for the first cluster

The different processes in the ArangoDB cluster must authenticate themselves against each other. To this end, we require a shared secret, which is deployed to each cluster machine. Here is a simple way to create such a secret on your laptop and to deploy it to each of the cluster nodes:

arangodb create jwt-secret

scp secret.jwt root@sync-dc1-node01:/arangodb/data

scp secret.jwt root@sync-dc1-node02:/arangodb/data

scp secret.jwt root@sync-dc1-node03:/arangodb/data

Note that we are using the arangodb executable from the distribution to create the secret file secret.jwt. For this to work, you have to install ArangoDB on your laptop, too. If you want to avoid this, you can create all the secrets and keys on one of your cluster nodes and use arangodb there.

Please keep the file secret.jwt in a safe place: possession of the file grants unrestricted superuser access to the database.
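The three scp commands above follow a pattern that repeats for every secret and key in this post. As a sketch, the pattern could be wrapped in a small helper. Both deploy_to_dc1 and the DRY_RUN switch are hypothetical names introduced here for illustration; they are not part of ArangoDB.

```shell
# Sketch: copy one file to /arangodb/data on every node of the first cluster.
# Node names and the target directory are taken from the steps above.

DC1_NODES="sync-dc1-node01 sync-dc1-node02 sync-dc1-node03"

# deploy_to_dc1 FILE
# With DRY_RUN=1 the scp commands are only printed, not executed.
deploy_to_dc1() {
  file="$1"
  for node in $DC1_NODES; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp $file root@${node}:/arangodb/data"
    else
      # Stop at the first node that cannot be reached.
      scp "$file" "root@${node}:/arangodb/data" || return 1
    fi
  done
}
```

Running DRY_RUN=1 deploy_to_dc1 secret.jwt first lets you review the commands before anything is copied. For the second cluster, the same idea applies with the dc2 node names.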

Create a CA and server keys, then deploy them:

All communications to the database as well as all communications within an ArangoDB cluster need to be encrypted via TLS. To this end, every process needs to have a pair of a private key and a corresponding public key (aka server certificate). During the steps of this blog post, we will create a self-signed CA key pair (the public CA key is signed by its own private key) and use that as the root certificate.

We use the following commands to create the CA keys tls-ca.key (private) and tls-ca.crt (public) as well as the server key and certificate in the keyfile files. A keyfile contains the private server key as well as the full chain of public certificates. Note how we add the server names into the server certificates:

arangodb create tls ca

arangodb create tls keyfile --host localhost --host 127.0.0.1 --host sync-dc1-node01 --host sync-dc1-node02 --host sync-dc1-node03 --keyfile sync-dc1-nodes.keyfile

scp sync-dc1-nodes.keyfile root@sync-dc1-node01:/arangodb/data

scp sync-dc1-nodes.keyfile root@sync-dc1-node02:/arangodb/data

scp sync-dc1-nodes.keyfile root@sync-dc1-node03:/arangodb/data

These commands are all executed on your local machine and deploy the server key to the cluster nodes. Keep the tls-ca.key file secure, since it can be used to sign certificates. In particular, do not deploy it to your cluster! Furthermore, keep the sync-dc1-nodes.keyfile file secure, since possession of it allows one to listen in on the communication with your servers.

Create an encryption key for encryption at rest:

ArangoDB can keep all the data on disk encrypted using the AES-256 encryption standard. This is a requirement for most secure database installations. To this end, we need a 32 byte key for the encryption. It can basically consist of random bytes. Use these commands to set up an encryption key:

dd if=/dev/random of=sync-dc1-nodes.encryption bs=1 count=32

chmod 600 sync-dc1-nodes.encryption

scp sync-dc1-nodes.encryption root@sync-dc1-node01:/arangodb/data

scp sync-dc1-nodes.encryption root@sync-dc1-node02:/arangodb/data

scp sync-dc1-nodes.encryption root@sync-dc1-node03:/arangodb/data

Keep the encryption key secret, because anyone who gets hold of both the key and the database files in the filesystem can read the database at rest.
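Because the key has to be exactly 32 bytes long, it can be worth checking the file before deploying it. The following is a minimal sketch for a POSIX shell; check_key_size is a hypothetical helper name.

```shell
# check_key_size FILE
# Succeeds only if FILE exists and is exactly 32 bytes long.
check_key_size() {
  if [ ! -f "$1" ]; then
    echo "$1: no such file" >&2
    return 1
  fi
  # wc -c counts bytes; tr strips the padding some wc versions add.
  size=$(wc -c < "$1" | tr -d ' ')
  if [ "$size" -eq 32 ]; then
    echo "$1: OK (32 bytes)"
  else
    echo "$1: expected 32 bytes, found $size" >&2
    return 1
  fi
}
```

For example, check_key_size sync-dc1-nodes.encryption should report OK for a key created with the dd command above.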

Create a shared secret to use with ArangoSync:

The data center to data center replication in ArangoDB is implemented as a set of external processes. This allows for scalability and minimal impact on the actual database operations. The executable for the ArangoSync system is called arangosync and is packaged with our Enterprise Edition. Similar to the above steps, we need to create a shared secret for the different ArangoSync processes, such that they can authenticate themselves against each other.

We produce the shared secret in a way that is very similar to the one for the actual ArangoDB cluster:

arangodb create jwt-secret --secret syncsecret.jwt

scp syncsecret.jwt root@sync-dc1-node01:/arangodb/data

scp syncsecret.jwt root@sync-dc1-node02:/arangodb/data

scp syncsecret.jwt root@sync-dc1-node03:/arangodb/data

Keep the file syncsecret.jwt secret, since its possession allows one to interfere with the ArangoSync system.

Create a TLS encryption setup for ArangoSync:

The same arguments about encrypted traffic and man-in-the-middle attacks apply to the ArangoSync system as explained above for the actual ArangoDB cluster. We choose to reuse the same CA key pair as above for the TLS certificate and key setup. As before, we work with the same server keyfile for all three nodes.

Here we create the server keyfile, signed by the same CA key pair in tls-ca.key and tls-ca.crt:

arangodb create tls keyfile --host localhost --host 127.0.0.1 --host sync-dc1-node01 --host sync-dc1-node02 --host sync-dc1-node03 --keyfile synctls.keyfile

scp synctls.keyfile root@sync-dc1-node01:/arangodb/data

scp synctls.keyfile root@sync-dc1-node02:/arangodb/data

scp synctls.keyfile root@sync-dc1-node03:/arangodb/data

As usual, keep the file synctls.keyfile secure, since its possession allows one to listen in on the encrypted traffic with the ArangoSync system.

Set up client authentication for ArangoSync:

There is one more secret to set up before we can hit the launch button. The two ArangoSync systems in the two data centers need to authenticate each other. Actually, the second data center (“DC B”, the replica) needs to authenticate itself with the first data center (“DC A”, the original). Since this is cross data center traffic, the authentication is done via TLS client certificates.

We create and deploy the necessary files with the following commands on your local machine:

arangodb create client-auth ca

arangodb create client-auth keyfile

scp client-auth-ca.crt root@sync-dc1-node01:/arangodb/data

scp client-auth-ca.crt root@sync-dc1-node02:/arangodb/data

scp client-auth-ca.crt root@sync-dc1-node03:/arangodb/data

Keep the file client-auth-ca.key secret, since it allows signing additional client authentication certificates. Do not store it on any of the cluster nodes.

Also, keep the file client-auth.keyfile secret, since it allows authentication with a syncmaster in either data center.

The first cluster can now be launched:

We launch the whole system by means of the ArangoDB starter, which is included in the ArangoDB distribution. We launch the starter via a systemd service file, which looks basically like the following snippet; feel free to adapt it to your needs:

[Unit]
Description=Run the ArangoDB Starter
After=network.target

[Service]
# system limits
LimitNOFILE=131072
LimitNPROC=131072
TasksMax=131072
Restart=on-failure
KillMode=process
Environment=SERVER=sync-dc1-node01
ExecStart=/arangodb/arangodb3e-linux-3.8.0/bin/arangodb \
    --starter.data-dir=/arangodb/data \
    --starter.address=${SERVER} \
    --starter.join=sync-dc1-node01,sync-dc1-node02,sync-dc1-node03 \
    --auth.jwt-secret=/arangodb/data/secret.jwt \
    --ssl.keyfile=/arangodb/data/sync-dc1-nodes.keyfile \
    --rocksdb.encryption-keyfile=/arangodb/data/sync-dc1-nodes.encryption \
    --starter.sync=true \
    --sync.start-master=true \
    --sync.start-worker=true \
    --sync.master.jwt-secret=/arangodb/data/syncsecret.jwt \
    --sync.server.keyfile=/arangodb/data/synctls.keyfile \
    --sync.server.client-cafile=/arangodb/data/client-auth-ca.crt
TimeoutStopSec=60

[Install]
WantedBy=multi-user.target

Apart from some infrastructural settings like the number of file descriptors and restart policies, the service file basically runs a single command. It refers to the starter program arangodb, which needs a few options to find all the secret files we have set up. These should be self-explanatory from what we have written above.

The network fabric of the cluster comes together because every instance of the starter is told its own address (with the --starter.address option) as well as a list of all participating starters (with the --starter.join option). We achieve this by setting the actual server hostname in the line with Environment=SERVER=.... Then we can refer to this environment variable with the syntax ${SERVER} further down in the service file. This means that the above file has to be edited in just a single place for each individual machine: you have to set the SERVER name.
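Since only the SERVER line differs between machines, the per-node files could also be generated from a single template rather than edited by hand. The following is a sketch under that assumption; make_service_files and the arango-<node>.service output names are hypothetical and not something the starter requires.

```shell
# Sketch: derive one service file per node from a template.
# Node names are taken from the steps above.

DC1_NODES="sync-dc1-node01 sync-dc1-node02 sync-dc1-node03"

# make_service_files TEMPLATE OUTDIR
# Writes OUTDIR/arango-<node>.service for every node, replacing the
# Environment=SERVER=... line with that node's hostname. All other
# lines, including the ${SERVER} references, are copied unchanged.
make_service_files() {
  template="$1"
  outdir="$2"
  for node in $DC1_NODES; do
    sed "s/^Environment=SERVER=.*/Environment=SERVER=${node}/" \
      "$template" > "$outdir/arango-${node}.service"
  done
}
```

Calling make_service_files arango.service . would write three files to the current directory. Each of them can then be copied to its node as /etc/systemd/system/arango.service, which removes the need to edit the file on the node.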

Provided the above file has been saved as arango.service on your local machine, you can deploy the service with the following commands on your local machine:

scp arango.service root@sync-dc1-node01:/etc/systemd/system/arango.service

scp arango.service root@sync-dc1-node02:/etc/systemd/system/arango.service

scp arango.service root@sync-dc1-node03:/etc/systemd/system/arango.service

On each node, you then have to edit this file and adjust the server name, as described above. Then you launch the service with the following commands on each node in the cluster:

systemctl daemon-reload

systemctl start arango

You can check the status of the service with:

systemctl status arango

Or follow the live log by running:

journalctl -flu arango

Please note that all the data for the cluster resides in subdirectories of /arangodb/data. Every instance on each machine has a subdirectory there that contains its port in the directory name. You can find further log files in these subdirectories.
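To get a quick overview of those log files on a node, something like the following may be handy. It is a sketch that assumes the log files end in .log and sit at most two directory levels below the data directory; list_starter_logs is a hypothetical helper name.

```shell
# list_starter_logs [DATA_DIR]
# Prints all *.log files found in DATA_DIR and its direct subdirectories.
# DATA_DIR defaults to /arangodb/data, the data directory used in this post.
list_starter_logs() {
  data_dir="${1:-/arangodb/data}"
  find "$data_dir" -maxdepth 2 -type f -name '*.log' | sort
}
```

The output can be passed on to other tools, for example list_starter_logs | xargs tail -n 20 to see the last lines of every log.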

You should now be able to point your browser to port 8529 on any of the nodes, this time using https. Before that, we recommend that you tell your browser to trust the tls-ca.crt certificate for server authentication. Since the server certificates are signed by the private CA key, your browser can then verify that it is talking to your servers and detect a man-in-the-middle attack.

An important step is now to change the root password, which will be empty in the beginning. You can use the UI for this.

We now set up the second cluster in much the same way. We simply show the commands used, since they differ only in details such as node and file names:

Extract the downloaded binary in its target location:

On your local machine run:

scp arangodb3e-linux-3.8.0.tar.gz root@sync-dc2-node01:/tmp

scp arangodb3e-linux-3.8.0.tar.gz root@sync-dc2-node02:/tmp

scp arangodb3e-linux-3.8.0.tar.gz root@sync-dc2-node03:/tmp

Then on each machine of the second cluster:

mkdir -p /arangodb/data

cd /arangodb

tar xzvf /tmp/arangodb3e-linux-3.8.0.tar.gz

export PATH=/arangodb/arangodb3e-linux-3.8.0/bin:$PATH

Create a shared secret for the second cluster

Perform these commands on your local machine:

arangodb create jwt-secret --secret secretdc2.jwt

scp secretdc2.jwt root@sync-dc2-node01:/arangodb/data

scp secretdc2.jwt root@sync-dc2-node02:/arangodb/data

scp secretdc2.jwt root@sync-dc2-node03:/arangodb/data

Warning: Keep the file secretdc2.jwt in a safe place, since possession of the file grants unrestricted superuser access to the database.

Create a CA and server keys, then deploy them:

Note that we are using the same pair of CA keys for the TLS setup here as before during the preparation of the first cluster, so we rely on the files tls-ca.key and tls-ca.crt on your local machine. Perform these commands:

arangodb create tls keyfile --host localhost --host 127.0.0.1 --host sync-dc2-node01 --host sync-dc2-node02 --host sync-dc2-node03 --keyfile sync-dc2-nodes.keyfile

scp sync-dc2-nodes.keyfile root@sync-dc2-node01:/arangodb/data

scp sync-dc2-nodes.keyfile root@sync-dc2-node02:/arangodb/data

scp sync-dc2-nodes.keyfile root@sync-dc2-node03:/arangodb/data

Keep the file sync-dc2-nodes.keyfile secure, since possession of it allows one to listen in on the communication with your servers.

Create an encryption key for encryption at rest

This mirrors what we did for the first cluster. On your local machine run:

dd if=/dev/random of=sync-dc2-nodes.encryption bs=1 count=32

chmod 600 sync-dc2-nodes.encryption

scp sync-dc2-nodes.encryption root@sync-dc2-node01:/arangodb/data

scp sync-dc2-nodes.encryption root@sync-dc2-node02:/arangodb/data

scp sync-dc2-nodes.encryption root@sync-dc2-node03:/arangodb/data

Keep the encryption key secret, because anyone who gets hold of both the key and the database files in the filesystem can read the database at rest.

Create a shared secret to use with ArangoSync:

Run the following on your local machine:

arangodb create jwt-secret --secret syncsecretdc2.jwt

scp syncsecretdc2.jwt root@sync-dc2-node01:/arangodb/data

scp syncsecretdc2.jwt root@sync-dc2-node02:/arangodb/data

scp syncsecretdc2.jwt root@sync-dc2-node03:/arangodb/data

Keep the file syncsecretdc2.jwt secret, since its possession allows one to interfere with the ArangoSync system.

Create a TLS encryption setup for ArangoSync:

Again, we proceed exactly as for the first cluster. Do this on your local machine:

arangodb create tls keyfile --host localhost --host 127.0.0.1 --host sync-dc2-node01 --host sync-dc2-node02 --host sync-dc2-node03 --keyfile synctlsdc2.keyfile

scp synctlsdc2.keyfile root@sync-dc2-node01:/arangodb/data

scp synctlsdc2.keyfile root@sync-dc2-node02:/arangodb/data

scp synctlsdc2.keyfile root@sync-dc2-node03:/arangodb/data

As usual, keep the file synctlsdc2.keyfile secure, since its possession allows one to listen in on the encrypted traffic with the ArangoSync system.

Set up client authentication for ArangoSync:

Note that for simplicity, we use the same client authentication CA for DC B as we did for DC A. This is not necessary but avoids a bit of confusion. On your local machine run:

arangodb create client-auth keyfile --host localhost --host 127.0.0.1 --host sync-dc2-node01 --host sync-dc2-node02 --host sync-dc2-node03 --keyfile client-auth-dc2.keyfile

scp client-auth-ca.crt root@sync-dc2-node01:/arangodb/data

scp client-auth-ca.crt root@sync-dc2-node02:/arangodb/data

scp client-auth-ca.crt root@sync-dc2-node03:/arangodb/data

Make sure to keep the files client-auth-ca.key and client-auth-dc2.keyfile secret and stored outside of the cluster.

Now, we’re ready to launch the second cluster:

We use a service file very similar to the one for the first cluster:

[Unit]
Description=Run the ArangoDB Starter
After=network.target

[Service]
# system limits
LimitNOFILE=131072
LimitNPROC=131072
TasksMax=131072
Restart=on-failure
KillMode=process
Environment=SERVER=sync-dc2-node01
ExecStart=/arangodb/arangodb3e-linux-3.8.0/bin/arangodb \
    --starter.data-dir=/arangodb/data \
    --starter.address=${SERVER} \
    --starter.join=sync-dc2-node01,sync-dc2-node02,sync-dc2-node03 \
    --auth.jwt-secret=/arangodb/data/secretdc2.jwt \
    --ssl.keyfile=/arangodb/data/sync-dc2-nodes.keyfile \
    --rocksdb.encryption-keyfile=/arangodb/data/sync-dc2-nodes.encryption \
    --starter.sync=true \
    --sync.start-master=true \
    --sync.start-worker=true \
    --sync.master.jwt-secret=/arangodb/data/syncsecretdc2.jwt \
    --sync.server.keyfile=/arangodb/data/synctlsdc2.keyfile \
    --sync.server.client-cafile=/arangodb/data/client-auth-ca.crt
TimeoutStopSec=60

[Install]
WantedBy=multi-user.target

Provided the above file is named arango-dc2.service on your local machine, you can deploy the service with the following commands on your local machine:

scp arango-dc2.service root@sync-dc2-node01:/etc/systemd/system/arango.service

scp arango-dc2.service root@sync-dc2-node02:/etc/systemd/system/arango.service

scp arango-dc2.service root@sync-dc2-node03:/etc/systemd/system/arango.service

Then, on each node, edit this file and adjust the server name, as described above. Afterwards, launch the service with these commands on each machine in the cluster:

systemctl daemon-reload

systemctl start arango

You can query the status of the service with:

systemctl status arango

Or follow the live log by running:

journalctl -flu arango

Note that all the data for the cluster resides in subdirectories of /arangodb/data. Every instance on each machine has a subdirectory there which contains its port in the directory name. You can find further log files in these subdirectories.

You should now be able to point your browser to port 8529 on any of the nodes and connect to the ArangoDB UI.

Don’t forget to change the root password for your installation. This can be easily done via the ArangoDB UI.

Enable ArangoSync synchronization and start it by using the CLI:

ArangoSync is controlled via its CLI, arangosync. It is installed together with ArangoDB, so there is no need to download and install it separately.

To configure DC to DC synchronisation from DC A to DC B, you now have to run this command on your local machine:

arangosync configure sync --master.endpoint=https://sync-dc2-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password> --source.endpoint=https://sync-dc1-node01:8532 --source.cacert=tls-ca.crt --master.keyfile=client-auth.keyfile

If you want (or need) to check whether replication is running, you can run the following two commands:

arangosync get status -v --master.endpoint=https://sync-dc1-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password>

arangosync get status -v --master.endpoint=https://sync-dc2-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password>

Get detailed information on running synchronization tasks:

arangosync get tasks -v --master.endpoint=https://sync-dc1-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password>

arangosync get tasks -v --master.endpoint=https://sync-dc2-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password>

Momentarily stop the synchronization process:

arangosync stop sync --master.endpoint=https://sync-dc2-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password> --ensure-in-sync=true

This will briefly stop writes to DC A until both clusters are in perfect sync. It will then stop synchronization and switch DC B back to read/write mode. You can use the switch --ensure-in-sync=false if you do not want to wait for synchronization to be ensured.

Abort synchronization:

If connectivity between the two locations is lost because of a network or other outage, you need to abort synchronization entirely with the following command:

arangosync abort sync --master.endpoint=https://sync-dc2-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password>

This is the command to abort the synchronization on the target side (DC B). If there is no connectivity between the clusters, this will naturally not abort the outgoing synchronization in DC A. Therefore, you may additionally have to send a corresponding command to the syncmaster in DC A.

It has a slightly different syntax:

arangosync abort outgoing sync --master.endpoint=https://sync-dc1-node01:8532 --master.cacert=tls-ca.crt --auth.user=root --auth.password=<password> --target <id>

Replace <id> with the ID of the cluster in DC B. You can retrieve this ID from the arangosync get status output of DC A. There you can also tell whether this step is necessary: if you see an outgoing synchronization that does not have a corresponding incoming synchronization in the other DC, the arangosync abort outgoing sync command is needed.

Restart synchronization in the opposite direction:

arangosync configure sync --master.endpoint=https://sync-dc1-node01:8532 --master.cacert=tls-ca.crt --source.cacert=tls-ca.crt --master.keyfile=client-auth.keyfile --auth.user=root --auth.password=<password> --source.endpoint=https://sync-dc2-node01:8532

More details on the ArangoSync CLI and its options can be found in our documentation at:

https://www.arangodb.com/docs/stable/administration-dc2-dc.html

Concluding words

As I wrote at the very beginning of this blog post, we thought long and hard about an ideal topic, and we’re very excited that a lot of our users want to use ArangoSync, either to test or in production. We truly hope that this is a great start for those looking into a rock-solid replicated database environment, and we wish you heaps of fun rolling it out and exploring the benefits!


ArangoBNB Data Preparation Case Study: Optimizing for Efficiency

Estimated reading time: 18 minutes

This case study covers a data exploration and analysis scenario about modeling data when migrating to ArangoDB. The topics covered in this case study include:

- Importing data into ArangoDB

- Developing Application Requirements before modeling

- Data Analysis and Exploration with AQL

This case study can hopefully be used as a guide as it shows step-by-step instructions and discusses the motivations in exploring and transforming data in preparation for a real-world application.

The information contained in this case study is derived from the development of the ArangoBnB project, a community project developed in JavaScript that is always open to new contributors. The project is an Airbnb clone with a Vue frontend and a React frontend being developed in parallel by the community. It is not necessary to download the project or be familiar with JavaScript for this guide. To see how we are using the data in a real-world project, check out the repository.

Data Modeling Example

Data modeling is a broad topic and there are different scenarios in practice. Sometimes, your team may start from scratch and define the application’s requirements before any data exists. In that case, you can design a model from scratch and might be interested in defining strict rules about the data using schema validation features; for that topic, we have an interactive notebook, and be sure to see the docs as well. This guide will focus on the situation where there is already some data to work with, and the task involves moving it into a new database, specifically ArangoDB, as well as cleaning up and preparing the data to use it in a project.

Preparing to migrate data is a great time to consider new features and ways to store the data. For instance, when coming from a relational database, it might be possible to reduce the number of collections being used or to store the data as a graph for analytics purposes. It is crucial to outline the requirements and some nice-to-haves and then compare those to the available data. Once it is clear what features the data contains and what the application requires, it is time to evaluate the database system features and determine how the data will be modeled and stored.

So, the initial steps we take when modeling data include:

1. Outline application requirements and optional features

2. Explore the data with those requirements in mind

3. Evaluate the database system against the dataset features and application requirements

As you will see, steps 2 and 3 can easily overlap; being aware of database system features can give you ideas while exploring the data and vice versa. This overlap is especially common when using the database system to explore, as we do in this example.

For this example, we are using the Airbnb dataset initially found here. The dataset contains listing information scraped from Airbnb, and the dataset maintainer provides it in CSV and GeoJSON format.

The files provided, their descriptions, and download links are:

NOTE: The following links are outdated and interested parties should use the recent links available at InsideAirBnB

- Listings.csv.gz: Detailed Listings data for Berlin (Download Link)

- Calendar.csv.gz: Detailed Calendar Data for listings in Berlin (Download Link)

- Reviews.csv.gz: Detailed Review Data for listings in Berlin (Download Link)

- Listings.csv: Summary information and metrics for listings in Berlin, good for visualisations (Download Link)

- Reviews.csv: Summary Review data and Listing ID, to facilitate time based analytics and visualisations linked to a listing (Download Link)

- Neighborhoods.csv: Neighbourhood list for geo filter, sourced from city or open source GIS files (Download Link)

- Neighborhoods.geojson: GeoJSON file of neighbourhoods of the city (Download Link)

The download links listed here are for 12-21-2020, which we used just before insideairbnb published the 02-22-2021 links. If they don’t work for some reason, you can always get the updated ones from insideairbnb, but there is no guarantee that they will be compatible with this guide.

Application Requirements

Looking back at the initial steps we typically take, the first step is to outline the application requirements and nice-to-haves. One could argue that doing data exploration might be necessary before determining the application requirements. However, knowing what our application requires can inform decisions when deciding how to store the data, such as extracting or aggregating data from other fields to fulfill an application requirement.

For this step, we had multiple meetings where we outlined our goals for the application. We have the added benefit of already knowing the database system we will be using and being familiar with its capabilities.

There are a couple of different motivations involved in this project. For us at ArangoDB, the goals of this project were to:

- Showcase the upcoming ArangoSearch GeoJSON features

- Provide a real-world full stack JavaScript application with a modern client-side frontend framework that uses the ArangoJS driver to access ArangoDB on the backend.

With those in mind, we continued to drill down into the actual application requirements. Since this is an Airbnb clone, we started by looking on their website and determining what was likely reproducible in a reasonable amount of time.

Here is what we started with:

- Search an AirBnB dataset to find rentals near a specified location

- A draggable map that shows results based on position

- Use ArangoSearch to keep everything fast

- Search the dataset using geographic coordinates

- Filter results based on keywords, price, number of guests, etc

- Use AQL for all queries

- Multi-lingual support

We set up the GitHub repository and created issues for the tasks associated with our application goals to further define the required dataset features. Creating these issues helps in thinking through the high-level tasks for both the frontend and backend and keeps us on track throughout.

Data Exploration

With our application requirements ready to go, it is time to explore the dataset and match the available data with our design vision.

One approach is to reach for your favorite data analysis tools and visualization libraries such as the Python packages Pandas, Plotly, Seaborn, or many others. You can look here for an example of performing some basic data exploration with Pandas. In the notebook, we discover the available fields, data types, consistency issues and even generate some visualizations.

For the rest of this section, we will look at how you can explore the data by just using ArangoDB’s tools, the Web UI, and the AQL query language. It is worth noting that there are many third-party tools available for analyzing data, and using a combination of tools will almost always be necessary. The purpose of this guide is to show you how much you can accomplish and how quickly you can accomplish it just using the tools built into ArangoDB.

Importing CSV files

First things first, we need to import our data. When dealing with CSV files, the best option is to use arangoimport. The arangoimport tool imports either JSON, CSV, or TSV files. There are different options available to adjust the data during import to fit the ArangoDB document model better. It is possible to specify things such as:

- Fields that it should skip during import

- Whether or not to convert values to non-string types (numbers, booleans and null values)

- Options for changing field names

System Attributes

Aside from the required options, such as server information and collection name, we will use the `--translate` option. We are cheating a little here for the sake of keeping this guide as brief as possible. We already know that there is a field in the listings files named id that is unique and perfectly suited for the _key system attribute. This attribute is automatically generated if we don’t supply anything, but can also be user-defined. This attribute is automatically indexed by ArangoDB, so having a meaningful value provided here means that we can perform quick and useful lookups against the _key attribute right away, for free.

In ArangoDB, system attributes such as _key cannot be changed once a document has been created. The system attributes include:

- _key

- _id (collectionName/_key)

- _rev

- _from (edge collection only)

- _to (edge collection only)

For more information on system attributes and ArangoDB’s data model, see the guide available in the documentation. To set a new _key attribute later, once we have a better understanding of the available data, we would need to create a new collection and specify the value to use; we get to skip that step.
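To make the effect of `--translate "id=_key"` more concrete, here is a minimal Python sketch that renames a CSV column while reading rows. It only illustrates the shape of the resulting documents and is not how arangoimport is implemented; the function name `translate_rows` and the two sample rows are hypothetical.

```python
import csv
import io


def translate_rows(csv_text, mapping):
    """Yield one dict per CSV row, renaming columns according to mapping."""
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        # Rename each column that appears in the mapping; keep the others as-is.
        yield {mapping.get(name, name): value for name, value in row.items()}


# Hypothetical two-row sample; the real listings file has many more columns.
sample = "id,name,accommodates\n1944,Cozy flat,2\n2015,Bright studio,4\n"
documents = list(translate_rows(sample, {"id": "_key"}))
print(documents[0])
```

In this sketch every value stays a string, because Python's `csv` module does no type conversion. The point is only that the `id` column ends up under the `_key` name.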

Importing Listings

For our example, we import the listings.csv.gz file, which per the website description, contains detailed listings data for Berlin.

The following is the command to run from the terminal once you have ArangoDB installed and the listings file unzipped.

arangoimport --file .\listings.csv --collection "listings" --type csv --translate "id=_key" --create-collection true --server.database arangobnb

Once the import is complete, you can navigate to the WebUI and start exploring this collection. If you are following along locally, the default URL for the WebUI is 127.0.0.1:8529.

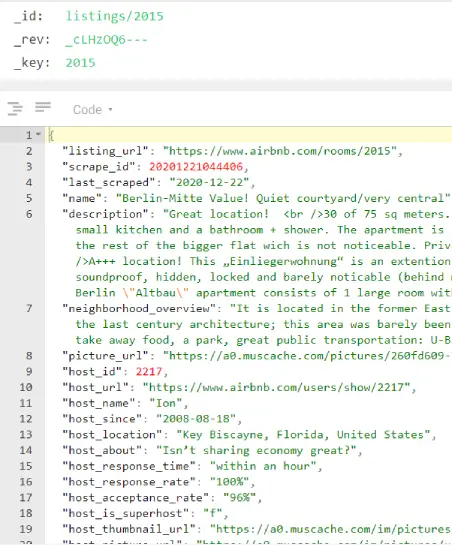

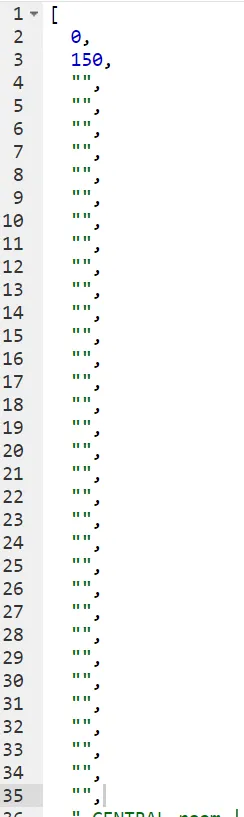

Once you open the listings collection, you should see documents that look like this:

Analyzing the Data Structure

The following AQL query iterates over the collection and reports which attributes exist, their data types, and how many documents contain each attribute with that type. This query provides insight into how consistent the data is and can point out any outliers in our data. When running these types of queries, it may be a good idea to supply a LIMIT to avoid aggregating over the entire collection; whether to do so depends on how important it is to check every single document in the collection.

FOR doc IN listings

FOR a IN ATTRIBUTES(doc, true)

COLLECT attr = a, type = TYPENAME(doc[a]) WITH COUNT INTO count

RETURN {attr, type, count}

Query Overview:

This query starts with searching the collection and then evaluates each document attribute using the ATTRIBUTES function. System attributes are deliberately ignored by setting the second argument to true. The COLLECT keyword signals that we will be performing an aggregation over the attributes of each document. We define two variables that we want to use in our return statement: the attribute name assigned to the `attr` variable and the type variable for the data types. Using the TYPENAME() function, we capture the data type for each attribute. With an ArangoDB aggregation, you can specify that you want to count the number of items by adding `WITH COUNT INTO` to your COLLECT statement followed by the variable to save the value into; in our case, we defined a `count` variable.
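For readers who prefer to see the aggregation spelled out imperatively, the following Python sketch counts (attribute, type) pairs over a list of dictionaries. It is an approximation under stated assumptions: `type_name` only imitates the labels that TYPENAME() returns, attributes starting with an underscore are treated as system attributes, and the sample documents are made up.

```python
from collections import Counter


def type_name(value):
    """Return a type label similar in spirit to AQL's TYPENAME()."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return "bool"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    if isinstance(value, list):
        return "array"
    return "object"


def attribute_type_counts(docs):
    """Count how often each (attribute, type) pair occurs across docs."""
    counts = Counter()
    for doc in docs:
        for attr, value in doc.items():
            if attr.startswith("_"):
                continue  # skip system attributes, like ATTRIBUTES(doc, true)
            counts[(attr, type_name(value))] += 1
    return counts


# Hypothetical documents: one name is a number and one document has no name.
docs = [
    {"_key": "1", "name": "Cozy flat", "accommodates": 2},
    {"_key": "2", "name": 42, "accommodates": 4},
    {"_key": "3", "accommodates": 1},
]
for (attr, type_label), count in sorted(attribute_type_counts(docs).items()):
    print(attr, type_label, count)
```

An attribute that shows up with more than one type, or with a count lower than the collection size, is exactly the kind of inconsistency the AQL query is meant to surface.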

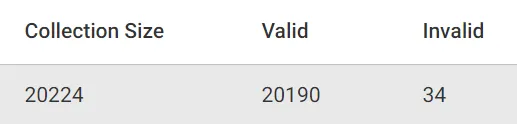

The results show that about half of the fields have a count of 20,224 (the collection size), while the rest have varying numbers. A schema-free database’s flexibility means understanding that specific values may or may not exist and planning around that. In our case, we can see that a good number of fields don’t have values. Since we are thinking about this data from a developer’s perspective, this will be invaluable when deciding which features to incorporate.

Data Transformations

The results contain 75 elements that we could potentially consider at this point, and a good place to start is with the essential attributes for our application.

Some good fields to begin with include:

- Accommodates: For the number of Guests feature

- Amenities: For filtering options such as wi-fi, hot tub, etc.

- Description: To potentially pull keywords from or for the user to read

- Review fields: For a review related feature

- Longitude, Latitude: Can we use this with our GeoJSON Analyzer?

- Name: What type of name? Why are two of the names a number?

- Price: For filtering by price

We have a lot to start with, and some of our questions will be answered easiest by taking a look at a few documents in the listings collection. Let’s move down the list of attributes we have to see how they could fit the application.

Accommodates

This attribute is pretty straightforward as it is simply a number, and based on our type checking, all documents contain a number for this field. The first one is always easy!

Amenities

The amenities appear to be arrays of strings, but encoded as a JSON string. The JSON encoding is either a result of the scraping method used by insideAirbnb or was applied for formatting purposes. Either way, it would be more convenient to store them as an actual array in ArangoDB. The JSON_PARSE() AQL function to the rescue! Using this function, we can quickly decode and store the amenities as an array all at once.

FOR listing IN listings

LET amenities = JSON_PARSE(listing.amenities)

UPDATE listing WITH { amenities } IN listings

Query Overview:

This query iterates over the listings collection and declares a new `amenities` variable with the LET keyword. We finish the FOR loop by updating the document with the JSON_PARSE’d amenities array. The UPDATE operation replaces pre-existing values, which is what we want in this situation.
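The same decoding step can be sketched outside the database. The Python snippet below is only an illustration of what parsing the string achieves; the helper name `parse_amenities` and the sample listing are hypothetical.

```python
import json


def parse_amenities(listing):
    """Return a copy of listing whose amenities value is a real list."""
    updated = dict(listing)
    # The source value is a JSON-encoded string such as '["Wifi", "Kitchen"]'.
    updated["amenities"] = json.loads(listing["amenities"])
    return updated


# Hypothetical listing with amenities stored as a JSON string.
listing = {"_key": "1944", "amenities": '["Wifi", "Kitchen", "Hot tub"]'}
print(parse_amenities(listing)["amenities"])
```

Once the value is a real array, individual amenities can be filtered on directly instead of matching substrings in a longer string.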

Description

Here is an example of a description of a rental location:

As you can see, this string contains some HTML tags, primarily for formatting, but depending on the application, it might be necessary to remove these characters to avoid undesired behavior. For this sort of text processing, we can use the AQL REGEX_REPLACE() function. We will be able to use this HTML formatting in our Vue application thanks to the v-html Vue directive, so we won’t remove the tags. However, for completeness, here is an example of what that function could look like:

FOR listing IN listings

RETURN REGEX_REPLACE(listing.description, "<[^>]+>\s+(?=<)|<[^>]+>", " ")

Query Overview:

This query iterates through the listings and uses REGEX_REPLACE() to match HTML tags and replaces them with spaces. This query does not update the documents as we want to make use of the HTML tags. However, you could UPDATE the documents instead of just returning the transformed text.
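To try the pattern on a single string before running it against the collection, a small Python sketch can help. It assumes Python's `re` module treats this particular pattern the same way as the regex engine behind REGEX_REPLACE(), which should hold for this simple expression but is not guaranteed in general. The whitespace-collapsing step and the sample description are additions for illustration and are not part of the AQL query.

```python
import re

# Same pattern as in the AQL query: a tag followed by whitespace and another
# tag, or any single tag.
TAG_PATTERN = re.compile(r"<[^>]+>\s+(?=<)|<[^>]+>")


def strip_tags(text):
    """Replace every HTML tag with a space, then collapse whitespace runs."""
    without_tags = TAG_PATTERN.sub(" ", text)
    # Extra step (not in the AQL query): tidy up the spaces left behind.
    return " ".join(without_tags.split())


# Hypothetical description containing formatting tags.
description = "<b>The space</b><br />Cozy flat near the park"
print(strip_tags(description))
```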

Reviews

For the fields related to reviews, it makes sense that they would have different numbers compared to the rest of the data. Some listings may have never had a review, and some will have more than others. The review data types are consistent, but not every listing has one. Handling reviews is not a part of our initial application requirements, but in a real-world setting, they likely would be. We had not discussed reviews during planning as this site likely won’t allow actual users to sign up for it.

Knowing that our data contains review information gives us options:

- Do we consider removing all review information from the dataset as it is unnecessary?

- Or, leave it and consider adding review components to the application?

This type of question is common when considering how to model data. It is important to consider these sorts of questions for performance, scalability, and data organization.

Eventually, we decided to use reviews as a way to sort the results. As of this writing, we have not implemented a review component that shows the reviews, but if any aspiring JavaScript developer is keen to make it happen, we would love to have another contributor on the project.

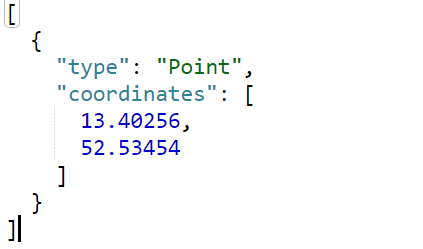

Location

When we started the project, we knew that this dataset contained location information. It is a dataset about renting properties in various locations, after all. The location data is stored as two attributes: longitude and latitude. However, we want to use the GeoJSON Analyzer, which requires a GeoJSON object. We prefer to use GeoJSON as it can be easier to work with since, for example, the order of the coordinate pairs isn’t always consistent in datasets and the GeoJSON analyzer supports more than just points, should our application need that. Fortunately, since these values represent a single point, converting this data to a valid GeoJSON object is a cinch.

FOR listing IN listings

UPDATE listing._key WITH {"location": GEO_POINT(listing.longitude, listing.latitude)} IN listings

Query Overview:

This query UPDATEs each listing with a new location attribute. The location attribute contains the result of the GEO_POINT() AQL function, which constructs a GeoJSON object from longitude and latitude values.

Note: Sometimes, it is helpful to see the output of a function before making changes to a document. To just see the result of an AQL function such as the GEO_POINT() function we used above, you could simply RETURN the result, like so:

FOR listing IN listings LIMIT 1 RETURN GEO_POINT(listing.longitude, listing.latitude)

Query Overview:

This query makes no changes to the original document. It simply selects the first available document and RETURNs the result of the GEO_POINT() function. This can be helpful for testing before making any changes.
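For reference, a GeoJSON Point is a small object with a type and a coordinate pair in longitude, latitude order. The Python sketch below builds that shape by hand; the helper name `geo_point` and the sample coordinates are hypothetical, and the snippet is meant only to show the structure that ends up in the location attribute.

```python
def geo_point(longitude, latitude):
    """Build a GeoJSON Point; GeoJSON lists longitude before latitude."""
    return {"type": "Point", "coordinates": [longitude, latitude]}


# Hypothetical listing located in central Berlin.
listing = {"longitude": 13.405, "latitude": 52.52}
print(geo_point(listing["longitude"], listing["latitude"]))
```

Keeping longitude first is the detail most worth double-checking, since many datasets and mapping tools list latitude first.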

Name

The name value spurred a couple of questions after the data type query that we will attempt to answer in this section.

- What is the purpose of the name field?

- Why are there numeric values for only 2 of them?

The first one is straightforward to figure out by opening a document and seeing what the name field contains. Here is an example of a listing name:

The name is the title or a tagline for the rental; you would expect to see it when searching for a property. We will want to use this for our rental titles, so it makes sense to dig a little deeper to find any inconsistencies. Let’s figure out why some have numeric values and if they should be adjusted. With AQL queries, sorting in ascending order starts with symbols and numbers; this gives us an easy option to look at the listings with numeric values for the name field. We will evaluate the documents more robustly in a moment but first, let’s just have a look.

FOR listing IN listings

SORT listing.name ASC

RETURN listing.name

Query Overview:

This query simply returns the listing names sorted in ascending order. We explicitly declare ASC for ascending, but it is also the default SORT order.

We see the results containing the numbers we were expecting, but we also see some unexpected results; some empty strings for name values. Depending on how important this is to the application, it may be necessary to update these empty fields with something indicating a name was not supplied and perhaps also make it a required field for future listings.

If we return the entire listing, instead of just the name, they all seem normal and thus might be worth leaving in as they are still potentially valid options for renters.

From the previous results, we know that 34 listings have invalid name attributes, but what if we were unsure how many there are because they didn’t all show up in these results?

FOR listing IN listings

FILTER HAS(listing, "name") AND TYPENAME(listing.name) == "string" AND listing.name != ""

COLLECT WITH COUNT INTO c

RETURN {"Collection Size": LENGTH(listings), "Valid": c, "Invalid": SUM([LENGTH(listings), -c])}

Query Overview:

This query starts with checking that the document HAS() the name attribute. If it does have the name attribute, we check that the data type of the name value has a TYPENAME() of "string". Additionally, we check that the name value is not an empty string. Finally, we count the number of valid names and subtract them from the number of documents in the collection. This provides us with the number of valid and invalid listing names in our collection.
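The three checks in the FILTER can also be written out in a few lines of Python, which may make the validity rule easier to adapt. This is a sketch over made-up documents; the function name `name_validity` is hypothetical and the result keys simply copy those used in the query.

```python
def name_validity(docs):
    """Count documents whose name exists, is a string, and is not empty."""
    valid = sum(
        1
        for doc in docs
        if "name" in doc and isinstance(doc["name"], str) and doc["name"] != ""
    )
    return {
        "Collection Size": len(docs),
        "Valid": valid,
        "Invalid": len(docs) - valid,
    }


# Hypothetical documents: one valid name, one empty, one numeric, one missing.
docs = [{"name": "Cozy flat"}, {"name": ""}, {"name": 2019}, {}]
print(name_validity(docs))
```

Adding another rule, such as a minimum length, would mean extending the condition in one place, just as you would add another clause to the FILTER.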

A developer could update this type of query with other checks to evaluate data validity. You could use the results of the above query to potentially motivate a decision for multiple things, such as:

- Is this enough of an issue to investigate further?

- Is there potentially a problem with my data?

- Do I need to cast these values TO_STRING() or leave them as is?

The answers to these questions depend on the data size and complexity, as well as the application.

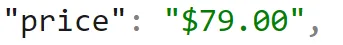

Price

The final value we will take a look at is the price. Our data type results informed us that the price is a string, and while looking at the listings, we saw that they contain the dollar sign symbol.

Luckily, ArangoDB has an AQL function that can cast values to numbers, TO_NUMBER().

FOR listing IN listings

UPDATE listing WITH {price: TO_NUMBER(SUBSTRING(SUBSTITUTE(listing.price, ",", ""), 1))} IN listings

Query Overview:

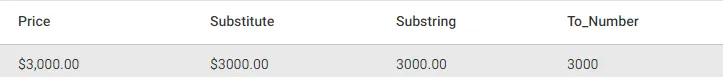

There is kind of a lot going on with this query so let’s start by evaluating it from the inside out.

We begin with the SUBSTITUTE() function, which removes the commas from the price (they are used as a thousands separator). This step is necessary because the TO_NUMBER() function considers a value with a comma an invalid number and would set the price to 0.

Next, we need to get rid of the $ as it would also not be considered a valid number. This is where SUBSTRING() comes into play. SUBSTRING() accepts an offset that indicates how many characters to skip at the beginning of the string. In our case, we only want to remove the first character in the string, so we provide the number 1.

Finally, we pass in our now comma-less and symbol-less value to the TO_NUMBER() function and UPDATE the listing price with the numeric representation of the price.

As mentioned previously, it is sometimes helpful to RETURN values to get a better idea of what these transformations look like before making changes. The following query returns the result of each intermediate step:

FOR listing IN listings

LIMIT 1

RETURN {Price: listing.price, Substitute: SUBSTITUTE(listing.price, ",", ""), Substring: SUBSTRING(SUBSTITUTE(listing.price, ",", ""), 1), To_Number: TO_NUMBER(SUBSTRING(SUBSTITUTE(listing.price, ",", ""), 1))}
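The inside-out steps described above can be mirrored in Python to experiment with individual price strings. This is a rough sketch rather than a faithful port: the helper name `parse_price` and the sample prices are hypothetical, and the fallback to 0 only imitates the TO_NUMBER() behavior described in this guide.

```python
def parse_price(price):
    """Convert a price string such as "$1,250.00" into a number."""
    without_commas = price.replace(",", "")  # like SUBSTITUTE(price, ",", "")
    without_symbol = without_commas[1:]      # like SUBSTRING(value, 1)
    try:
        return float(without_symbol)         # like TO_NUMBER(value)
    except ValueError:
        # Assumption: mimic the 0 result for values that are not valid numbers.
        return 0


# Hypothetical price strings in the format seen in the listings.
for raw in ["$80.00", "$1,250.00"]:
    print(raw, parse_price(raw))
```

Note that the sketch blindly drops the first character, exactly like the AQL query does, so it relies on every price starting with a currency symbol.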

Conclusion

Other fields could potentially be updated, changed, or removed, but those are all we will cover in this guide. As the application is developed, there will likely be even more changes that need to occur with the data, but we now have a good starting point.

Hopefully, this guide has also given you a good idea of the data exploration capabilities of AQL. We certainly didn’t cover all of the AQL functions that could be useful for data analysis and exploration but enough to get started. To continue exploring these, be sure to review the type check and cast functions and AQL in general.

Next Steps

With the data modeling and transformations complete, some next steps would be to:

- Explore the remaining collections

- Configure a GeoJSON Analyzer

- Create an ArangoSearch View

- Consider indexing requirements

- Start building the app!
